Rclone backup continued - Part 2

February 8, 2021 | Stack

This is a continuation of "rclone setup..", so the configuration and setup of rclone and its "Remotes" can be found there.

When operating rclone you have two options: a GUI called RcloneBrowser, and the console. Rclone has a very good community, documentation that is easy to navigate and understand, and a command overview, so whatever you are going to use rclone for, from a few commands to scripting, it should be fairly easy to get started. There are also people who have already made some nice scripts; I'll look at one of them, rclone_jobber, written by Wolfram Volpi and found on GitHub.

The purpose of my Raspberry Pi setup is to operate remotely: the intent is not to back up the client itself (a Raspberry Pi), nor to back up to the Pi itself. The following command will list what remotes we have available:

rclone listremotes

If you want to list the directories on your "remote", the following command would do just that:

rclone lsd remote:path

- ls (lists path and size of all objects, including those in subdirectories)

- lsl (like ls, but also shows the modification time)

Crypt

A crypt is an encrypted directory in rclone. In the following I'll make a crypt remote to encrypt files on a remote cloud storage. (As you have probably noticed from the last 10 years of debate, storage at the cloud providers is currently not "private", and we don't want config files and passwords stored unencrypted at these providers.) So:

rclone config

- Choose -> n (New Remote)

- Name -> OneDrive_Encrypt (could be anything)

- Type choose -> "crypt"

- Now (supposing you already have a remote for OneDrive) enter the path for your new encrypted dir -> OneDrive:Encrypt

- How to encrypt filenames is a bit up to you. I'll just follow the "standard" -> 1

- Encrypt directory names -> 1 (unless you chose not to encrypt filenames)

- Password (never lose this one)

- Salt: depending on your password strength you could also choose a salt (and save this as well).

- Advanced config -> n (No)

- Confirm -> y

- Exit config -> q

Now for the exciting part, let's test this out on the content of the Documents directory:

rclone copy /home/pi/Documents/ OneDrive_Encrypt:(and then)

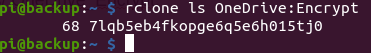

rclone ls OneDrive:Encryptwhich should give you something like this:

and then list thru your OneDrive_Encrypt

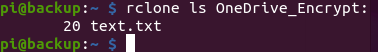

rclone ls OneDrive_Encrypt:Which would show you the decrypted content:

A very nice feature that one should know about in rclone is the dry-run option, which simulates an execution. So you are able to see the result without it taking effect. E.g.:

rclone --dry-run -v --min-size 100M delete remote:path

Backup scripts

As mentioned in the beginning, Wolfram Volpi has already made a great script, with a lot of examples included, on GitHub: https://github.com/wolfv6/rclone_jobber. In the following I'll basically just be modifying his rclone_jobber.sh script for my needs.

There are multiple ways of doing backups. It could be a kind of revisioning/versioning (incremental backup), so you can go back in time and fetch a document the way it was three days ago (sync) or it could be a full copy of a directory (clone).

The following files can be found in this GitHub repository https://github.com/rune1979/rclone-script-templates and consist of three generic templates. In the /examples folder there are examples of how to use the scripts.

Sync

All of the following scripts should stay as they are, and then you make small scripts for each backup job to call them. You can find example scripts here: https://github.com/rune1979/rclone-script-templates/tree/master/examples
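To make the idea of a "small script per backup job" concrete, here is a minimal sketch of what such a job script could look like. It is not taken from the examples folder; the function name, paths, remote name and email address are all placeholders I made up for illustration. The only thing it relies on is the order of the six parameters that sync.sh reads.

```shell
#!/bin/bash
# Hypothetical job script (e.g. documents_sync.sh); every value is a placeholder.

run_documents_sync() {
    sync_script="$1"                 # path to sync.sh
    src="/home/pi/Documents"         # $1 of sync.sh: directory to back up
    dst="OneDrive_Encrypt:backup"    # $2: destination, without "last_snapshot"
    job="documents_sync.sh"          # $3: job name, used in the "already running" check
    keep_days="30"                   # $4: retention in days
    opts="--dry-run"                 # $5: extra rclone options
    mail_to="admin@example.com"      # $6: admin email, "" to disable mail

    # Hand all six values to the generic script in the order it expects.
    "$sync_script" "$src" "$dst" "$job" "$keep_days" "$opts" "$mail_to"
}

# Usage (assumes sync.sh sits next to this job script):
# run_documents_sync ./sync.sh
```

Keeping `--dry-run` in the options until the log output looks right is probably a sensible way to try out a new job.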

Let's copy rclone_jobber.sh and modify it a bit. The first will be a sync script, which keeps all changes for the last 30 days (or whatever you choose). So, sync.sh will be very similar to the original rclone_jobber.sh. It can be found on GitHub: https://github.com/rune1979/rclone-script-templates/blob/master/sync.sh. The mail functionality was set up here: https://thehotelhero.com/mail-sending-from-a-raspberry-pi. You might want to pull the latest version from GitHub instead of using the following.

#!/bin/bash

#This script is based on the rclone_jobber.sh at https://github.com/wolfv6/rclone_jobber

################################# parameters #################################

source="$1" #the directory to back up (without a trailing slash)

dest="$2" #the directory to back up to (without a trailing slash or "last_snapshot") destination=$dest/last_snapshot

job_name="$3" #job_name="$(basename $0)"

retention="$4" # How many days do you want to retain old files for

options="$5" #rclone options like "--filter-from=filter_patterns --checksum --log-level="INFO" --dry-run"

#do not put these in options: --backup-dir, --suffix, --log-file

email="$6" # the admin email

################################ other variables ###############################

# Be careful if you change any of the below.

# $new is the directory name of the current snapshot

# $timestamp is time that old file was moved out of new (not time that file was copied from source)

new="last_snapshot"

timestamp="$(date +%F_%H%M%S)" #time w/o colons if thumb drive is FAT format, which does not allow colons in file name

# set log_file path

#path="$(realpath "$0")" #this will place log in the same directory as this script

path="$PWD"

log_file="${path}/all_backup_logs.log" #all jobs log to one file in the current working directory

#log_file="${path%.*}.log" #replace path extension with "log"

#log_file="/var/log/rclone_jobber.log" #for Logrotate

# set log_option for rclone

log_option="--log-file=$log_file" #log to log_file

#log_option="--syslog" #log to systemd journal

################################## functions #################################

send_to_log()

{

msg="$1"

# set log - send msg to log

echo "$msg" >> "$log_file" #log msg to log_file

#printf "$msg" | systemd-cat -t RCLONE_JOBBER -p info #log msg to systemd journal

}

send_mail()

{

if [[ ! -z "$email" ]];then

msg="$1"

/usr/sbin/sendmail -i -- $email <<EOF

Subject: Backup Urgency - $job_name

$msg

EOF

fi

}

# print message to echo, log, and mail

print_message()

{

urgency="$1"

msg="$2"

message="${urgency}: $job_name $msg"

echo "$message"

send_to_log "$(date +%F_%T) $message"

send_mail "$msg"

}

# confirmation and logging

conf_logging() {

exit_code="$1"

if [ "$exit_code" -eq 0 ]; then #if no errors

confirmation="$(date +%F_%T) completed $job_name"

echo "$confirmation"

send_to_log "$confirmation"

send_to_log ""

else

print_message "ERROR" "failed. rclone exit_code=$exit_code"

send_to_log ""

exit 1

fi

}

################################# range checks ################################

# if source is empty string

if [ -z "$source" ]; then

print_message "ERROR" "aborted because source is empty string."

exit 1

fi

# if dest is empty string

if [ -z "$dest" ]; then

print_message "ERROR" "aborted because dest is empty string."

exit 1

fi

# if source is empty

if ! test "$(rclone lsf --max-depth 1 "$source")"; then # true when lsf prints nothing; rclone lsf requires rclone 1.40 or later

print_message "ERROR" "aborted because source is empty."

exit 1

fi

# if job is already running (maybe previous run didn't finish)

# https://github.com/wolfv6/rclone_jobber/pull/9 said this is not working in macOS

if pidof -o $PPID -x "$job_name"; then

print_message "WARNING" "aborted because it is already running."

exit 1

fi

############################### move_old_files_to #############################

backup_dir="--backup-dir=$dest/sync_archive/$timestamp"

################################### back up ##################################

cmd="rclone sync $source $dest/$new $backup_dir $log_option $options"

# progress message

echo "Back up in progress $timestamp $job_name"

echo "$cmd"

# set logging to verbose

#send_to_log "$timestamp $job_name"

#send_to_log "$cmd"

eval $cmd

exit_code=$?

conf_logging "$exit_code"

################################### clean up old function ##################################

delete_dir() {

dir="$1"

cmd_delete="rclone purge $dest/sync_archive/$dir $log_option $options" # you might want to dry-run this.

echo "Removing old synced files $dir $job_name"

echo "$cmd_delete"

eval $cmd_delete

exit_code=$?

if ! [ $exit_code == 3 ]; then # We don't want any alerts on 3 (no directories found)

conf_logging "$exit_code"

fi

}

CMD_LSD="rclone lsd --max-depth 1 $dest/sync_archive/"

mapfile -t dir_array < <(eval $CMD_LSD)

DATE=$(date -d "$retention days ago" +'%Y-%m-%d') # cutoff date (GNU date syntax)

for i in "${!dir_array[@]}"; do

dir_path="${dir_array[i]}"

dir_date=$(echo ${dir_path##* })

dir_date2=$(echo ${dir_date%_*})

conv_date=$(date -d "$dir_date2" +'%Y-%m-%d')

if [[ $conv_date < $DATE ]];then

delete_dir "$dir_date"

fi

done

exit 0

The above script is not necessarily a good substitute for a full backup. But it will let you go back in time (for as long as the retention is set) and deletes everything older. Depending on how much activity there is on the original filesystem, you could increase or decrease the retention time.

The above script is fit for purpose in a setting where you might want to retrieve an earlier version of a document. And incremental backups don't put a heavy load on the hardware and network.
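The clean-up at the end of the sync script boils down to two steps: take the directory name from each `rclone lsd` line, and compare its date part with a cutoff. The sketch below isolates that logic in two small helper functions so it can be tried without touching a remote. The function names are my own, and I compare the dates as plain numbers instead of calling `date -d` for each directory, so treat it as an illustration of the idea rather than the script's exact code.

```shell
#!/bin/sh
# Sketch of the age check used when pruning sync_archive.
# An `rclone lsd` line ends in the directory name, e.g.
#   "          -1 2021-01-05 12:00:00        -1 2021-01-05_120000"

# Print the directory name (everything after the last space) of an lsd line.
archive_dir_name() {
    printf '%s\n' "${1##* }"
}

# Succeed (exit status 0) if the snapshot directory is older than the cutoff.
# $1 = directory name like 2021-01-05_120000
# $2 = cutoff date like 2021-01-10
is_expired() {
    dir_date="${1%_*}"                                  # strip the _HHMMSS part
    dir_num="$(printf '%s' "$dir_date" | tr -d '-')"    # 2021-01-05 -> 20210105
    cut_num="$(printf '%s' "$2" | tr -d '-')"           # 2021-01-10 -> 20210110
    [ "$dir_num" -lt "$cut_num" ]
}

# Usage (the cutoff line assumes GNU date):
# cutoff="$(date -d "30 days ago" +%F)"
# is_expired "2021-01-05_120000" "$cutoff" && echo "would be purged"
```

A directory dated exactly on the cutoff is kept, which matches the strict "older than" comparison in the script.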

Recover a file

As the above script makes it possible to retrieve individual files to a certain extent, I have made a retrieval script: https://github.com/rune1979/rclone-script-templates/blob/master/restore_from_sync_archive.sh. It makes it possible to recover a single file (in case of corruption, mistakes or deletion) back to its origin.
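The core of the restore is a bit of path juggling: the path you paste starts with the timestamp directory from the archive, and that timestamp is moved to the end so the restored copy lands next to the original without overwriting it. A minimal sketch of just that step, with a made-up function name and example paths:

```shell
#!/bin/sh
# Sketch of how the restore destination is derived from the pasted path.
# $1 = path relative to the archive root, as printed by `rclone ls`,
#      e.g. 2021-02-01_030000/Documents/notes.txt
# $2 = original location, e.g. /home/pi
restore_destination() {
    old="$1"
    pre_dest="$2"
    snapshot="${old%%/*}"    # first path component: 2021-02-01_030000
    rel_path="${old#*/}"     # the rest: Documents/notes.txt
    printf '%s\n' "$pre_dest/$rel_path-$snapshot"
}

# Usage:
# restore_destination "2021-02-01_030000/Documents/notes.txt" "/home/pi"
```

Because `rclone copy` treats the destination as a directory, the recovered file ends up inside a new directory carrying the old file name plus the snapshot timestamp.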

#!/usr/bin/env sh

#restore a file to its original location

#################### PARAMETERS ####################

#source (backup location) and destination (original location) paths

list_source="$1"

pre_dest="$2"

job_name="$3"

options="$4"

#################### SCRIPT ####################

for entry in "$list_source"/*

do

echo "$entry"

done

printf '\n>>>>>>>>>>>>>> Enter the name of the file to recover (*some_file.txt) <<<<<<<<<<<<<<<<\n'

read file

rclone ls --include "$file" "$list_source"

printf '\n>>>>>>>>>>>>>> Copy the above path for the file to recover <<<<<<<<<<<<<<<<<\n'

read old

source="$list_source/$old"

get_old_date="${old%%/*}"

rm_time_dir="${old#*/}"

dest="$pre_dest/$rm_time_dir-$get_old_date"

printf '\n copying from: %s\n' "$source"

printf '\n to: %s\n' "$dest"

printf "\n*** restoring old file in new dated directory ***\n"

rclone copy $source $dest $options

Backup

If you would like to make a complete backup from time to time, let's say copy the whole "last_snapshot" and save it with a timestamp, the following script will make a full backup on the Xth day of every month. We will also try to limit the number of old backups to something like this: keep the backup from the Xth for the last three months and delete backups older than that, but keep certain months (e.g. the 1st or 7th month of the year) and only delete those when they are older than XXX days.
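The decision described above can be sketched as a small function: nothing happens unless today is the chosen day, and on that day the month decides whether the copy goes to the long-term directory or the normal monthly one. The function name is made up, and I match the month against the comma-separated list with a `case` pattern instead of `grep`, so this is an illustration of the rule rather than a copy of the script.

```shell
#!/bin/sh
# Sketch: decide where (and whether) a full backup goes today.
# $1 = day of month (e.g. 01)     $2 = month (e.g. 04)
# $3 = date_for_backup (e.g. 01)  $4 = keep_mnt (e.g. 01,04,07,10)
backup_target() {
    day="$1"; month="$2"; date_for_backup="$3"; keep_mnt="$4"

    # Only run on the configured day of the month.
    if [ "$day" != "$date_for_backup" ]; then
        echo "skip"
        return
    fi

    # Months listed in keep_mnt go to the long-term directory.
    case ",$keep_mnt," in
        *",$month,"*) echo "old_backups" ;;
        *)            echo "month_backup" ;;
    esac
}

# Usage:
# backup_target "$(date +%d)" "$(date +%m)" 01 "01,04,07,10"
```

Backups in month_backup are then pruned after the short retention, while those in old_backups are only removed after the long one.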

Ideally we would compress the full backup copy to limit storage consumption. There is no single simple way to do this, but there are three ways, none of them perfect.

- Rclone has an experimental feature called "compress". It is really experimental and has a lot of drawbacks, and it is recommended NOT to use this feature unless you know exactly what it involves.

- Download the "last_snapshot" to your Pi backup server, compress it, rename the compressed file, upload it again to the remote, and then remove the compressed file and "last_snapshot" from the Pi. The problem here is that this takes up a lot of additional bandwidth and a lot of storage on the Pi. Just imagine a "last_snapshot" of 280GB plus the compressed file.

- The last and probably the best solution: make a compressed file on the client side (the machine that is backed up) and download that on a scheduled basis.

The following backup script therefore does not compress.

#!/bin/bash

################# PARAMETERS ################

# WARNING! This script has not been fully tested, so keep an eye on it.

# source and destination usually is something like remote:path instead of the below local paths

source="$1"

dest="$2"

date_for_backup="$3" # two-digit day of the month on which to run the backup, e.g. 01 for the first

del_after="$4" # Will delete everything older than x days in bckp, e.g. 30

keep_mnt="$5" # Keep these backup months in the old_dir, e.g. 01 or 01,04,07,10 (comma separated)

del_all_after="$6" # Will delete everything older than x days in old_dir, e.g. 365

job_name="$7"

options="$8" # optional rclone flags, e.g. --dry-run (read by delete_dir as $options)

email="$9" # To send alerts

################# SET VARS ##############

# Be careful to double check the whole script if you change the below variables

bckp="month_backup" # dir to store monthly backups

old_dir="old_backups" # dir where to retain the old backups

path="$PWD"

log_file="${path}/all_backup_logs.log"

log_option="--log-file=$log_file" # rclone log to log_file

################# FUNCTIONS #################

send_to_log()

{

msg="$1"

# set log - send msg to log

echo "$msg" >> "$log_file" #log msg to log_file

#printf "$msg" | systemd-cat -t RCLONE_JOBBER -p info #log msg to systemd journal

}

send_mail()

{

if [[ ! -z "$email" ]];then

msg="$1"

/usr/sbin/sendmail -i -- $email <<EOF

Subject: Backup Urgency - $job_name

$msg

EOF

fi

}

print_message()

{

urgency="$1"

msg="$2"

message="${urgency}: $job_name $msg"

echo "$message"

send_to_log "$(date +%F_%T) $message"

send_mail "$msg"

}

conf_logging()

{

exit_code="$1"

if [ "$exit_code" -eq 0 ]; then #if no errors

confirmation="$(date +%F_%T) completed $bckp"

echo "$confirmation"

send_to_log "$confirmation"

send_to_log ""

else

print_message "ERROR" "failed. rclone exit_code=$exit_code"

send_to_log ""

exit 1

fi

}

delete_dir() {

dir="$1"

archive="$2"

if [ "$archive" = "old" ];then

cmd_delete="rclone purge $dest/$old_dir/$dir $log_option $options" # you might want to dry-run this.

else

cmd_delete="rclone purge $dest/$bckp/$dir $log_option $options" # you might want to dry-run this.

fi

echo "Removing old archive backups $dir $job_name"

echo "$cmd_delete"

eval $cmd_delete

exit_code=$?

if ! [ $exit_code == 3 ]; then # We don't want any alerts on 3 (no directories found)

conf_logging "$exit_code"

fi

}

################# SCRIPT ################

log_option="--log-file=$log_file"

ifStart=`date '+%d'`

month=`date '+%m'`

timestamp="$(date +%F_%H%M%S)"

if [ $ifStart == $date_for_backup ]; then

if echo "$keep_mnt" | grep -q "$month"; then

cmd="rclone copy $source $dest/$old_dir/$timestamp $log_option"

echo "$cmd"

eval $cmd

exit_code=$?

conf_logging "$exit_code"

CMD_LSD="rclone lsd --max-depth 1 $dest/$old_dir/"

mapfile -t dir_array < <(eval $CMD_LSD)

DATE=$(date -d "$del_all_after days ago" +'%Y-%m-%d') # cutoff date (GNU date syntax)

for i in "${!dir_array[@]}"; do

dir_path="${dir_array[i]}"

dir_date=$(echo ${dir_path##* })

dir_date2=$(echo ${dir_date%_*})

conv_date=$(date -d "$dir_date2" +'%Y-%m-%d')

if [[ $conv_date < $DATE ]];then

delete_dir "$dir_date" "old"

fi

done

else

cmd="rclone copy $source $dest/$bckp/$timestamp $log_option"

echo "$cmd"

eval $cmd

exit_code=$?

conf_logging "$exit_code"

CMD_LSD="rclone lsd --max-depth 1 $dest/$bckp/"

mapfile -t dir_array < <(eval $CMD_LSD)

DATE=$(date -d "$del_after days ago" +'%Y-%m-%d') # cutoff date (GNU date syntax)

for i in "${!dir_array[@]}"; do

dir_path="${dir_array[i]}"

dir_date=$(echo ${dir_path##* })

dir_date2=$(echo ${dir_date%_*})

conv_date=$(date -d "$dir_date2" +'%Y-%m-%d')

if [[ $conv_date < $DATE ]];then

delete_dir "$dir_date" "mnt"

fi

done

fi

fi

exit 0

Rclone continued III - Two years later here..