Wazuh single node on Swarm

August 6, 2021 | Cluster

As a private user and an Open Source advocate, there are only a couple of SIEM systems to choose from. One of them, Wazuh, is an offspring of OSSEC, probably the best known of them all. One of the great things about Wazuh is the ability to deploy a scalable container setup. In the following I will try to set Wazuh up on a one-node Swarm cluster. It is of course not ideal for a production environment, where you would want HA, but the following setup doesn't need much change to scale out to multiple nodes (refer to "production-cluster.yml"). This is more of a family setup on a single node with 8 GB of RAM (as I recall, at least 4 GB of available memory is recommended).

WARNING: In general (commercial use) you would not use public hosting providers or datacenters for a security setup like this; you might instead deploy the SIEM system on premises (your own servers, where only you have access). But for a private setup it is often more convenient to rent a VPS online. I would suggest going at least with a European provider with no ties to the US or China, and maybe even taking it a step further and choosing a Swiss or Icelandic provider with independent security audits. You don't want anyone looking through all of the logs from all of your devices (and to some extent getting access to them)!

Server settings
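
Elasticsearch relies on memory-mapped files, which is why this section touches the kernel setting vm.max_map_count. Before changing anything, it can help to see what the host currently uses. The following is only a minimal sketch for a Linux host; the variable REQUIRED and the function needs_raise are names made up for this illustration.

```shell
#!/bin/sh
# Minimum mmap count this guide sets for Elasticsearch.
REQUIRED=262144

# Hypothetical helper: succeeds (exit 0) when the given value is below REQUIRED.
needs_raise() {
    [ "$1" -lt "$REQUIRED" ]
}

# Read the live value from procfs (Linux only); fall back to 0 if unreadable.
current=$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)

if needs_raise "$current"; then
    echo "vm.max_map_count is $current, below $REQUIRED"
else
    echo "vm.max_map_count is $current, OK"
fi
```

If the value is already high enough, the sysctl step can be skipped.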

Set the vm.max_map_count to 262144:

sysctl -w vm.max_map_count=262144

Wazuh docker repository

Start by cloning the latest version of the GitHub "wazuh/wazuh-docker" repository.

git clone https://github.com/wazuh/wazuh-docker.git

Basically there are two compose files: a demo/single-node "docker-compose.yml" and a production-ready "production-cluster.yml".

As I am going for a single-node setup, I'll be using docker-compose.yml and making some changes to the file.

After some editing the docker-compose.yml should look something like this:

# Wazuh App Copyright (C) 2021 Wazuh Inc. (License GPLv2)
version: '3.7'

services:
  wazuh:
    image: wazuh/wazuh-odfe:4.1.5
    hostname: wazuh-manager
    restart: always
    ports:
      - "1514:1514"
      - "1515:1515"
      - "514:514/udp"
      - "55000:55000"
    environment:
      - ELASTICSEARCH_URL=https://elasticsearch.YOUR-DOMAIN.com:9200
      - ELASTIC_USERNAME=YOUR_USERNAME
      - ELASTIC_PASSWORD=YOUR_PASSWORD
      - FILEBEAT_SSL_VERIFICATION_MODE=none
    volumes:
      - ossec_api_configuration:/var/ossec/api/configuration
      - ossec_etc:/var/ossec/etc
      - ossec_logs:/var/ossec/logs
      - ossec_queue:/var/ossec/queue
      - ossec_var_multigroups:/var/ossec/var/multigroups
      - ossec_integrations:/var/ossec/integrations
      - ossec_active_response:/var/ossec/active-response/bin
      - ossec_agentless:/var/ossec/agentless
      - ossec_wodles:/var/ossec/wodles
      - filebeat_etc:/etc/filebeat
      - filebeat_var:/var/lib/filebeat

  elasticsearch:
    image: amazon/opendistro-for-elasticsearch:1.13.0
    hostname: elasticsearch
    restart: always
    ports:
      - "9200:9200"
    environment:
      - discovery.type=single-node
      - cluster.name=wazuh-cluster
      - network.host=0.0.0.0
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - bootstrap.memory_lock=true
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    volumes:
      - ./production_cluster/elastic_opendistro/internal_users.yml:/usr/share/elasticsearch/plugins/opendistro_security/securityconfig/internal_users.yml

  kibana:
    image: wazuh/wazuh-kibana-odfe:4.1.5
    hostname: kibana
    restart: always
    ports:
      - 5601:5601
    environment:
      - ELASTICSEARCH_USERNAME=YOUR_USERNAME
      - ELASTICSEARCH_PASSWORD=YOUR_PASSWORD
      - SERVER_SSL_ENABLED=true
      # - SERVER_SSL_CERTIFICATE=/usr/share/kibana/config/opendistroforelasticsearch.example.org.cert
      # - SERVER_SSL_KEY=/usr/share/kibana/config/opendistroforelasticsearch.example.org.key
      - SERVER_SSL_CERTIFICATE=/usr/share/kibana/config/cert.pem
      - SERVER_SSL_KEY=/usr/share/kibana/config/key.pem
    volumes:
      - ./production_cluster/kibana_ssl/cert.pem:/usr/share/kibana/config/cert.pem
      - ./production_cluster/kibana_ssl/key.pem:/usr/share/kibana/config/key.pem
    depends_on:
      - elasticsearch
    links:
      - elasticsearch:elasticsearch
      - wazuh:wazuh

volumes:
  ossec_api_configuration:
  ossec_etc:
  ossec_logs:
  ossec_queue:
  ossec_var_multigroups:
  ossec_integrations:
  ossec_active_response:
  ossec_agentless:
  ossec_wodles:
  filebeat_etc:
  filebeat_var:

Generating all the certificates

Generate the certificate for Kibana by executing the following bash script, located in the wazuh-docker/production_cluster/kibana_ssl/ dir.

bash ./generate-self-signed-cert.sh

In a multi-node setup, generating certificates for Filebeat and each Elasticsearch node is also necessary.
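
Since most trouble with this setup tends to come from certificates, it may be worth inspecting the Kibana pair right after generating it. The sketch below assumes the openssl command line tool is installed and that the script wrote cert.pem and key.pem (the file names mounted in the compose file); show_cert and cert_matches_key are helper names invented for this example.

```shell
#!/bin/sh
# Print subject and expiry date of a PEM certificate.
# Fails if the file is missing or is not a valid certificate.
show_cert() {
    openssl x509 -in "$1" -noout -subject -enddate
}

# Succeeds when the certificate ($1) and the private key ($2)
# carry the same public key, i.e. they belong together.
cert_matches_key() {
    cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 1
    key_pub=$(openssl pkey -in "$2" -pubout) || return 1
    [ -n "$cert_pub" ] && [ "$cert_pub" = "$key_pub" ]
}

# Usage, run from the wazuh-docker/production_cluster/kibana_ssl/ dir:
#   show_cert cert.pem
#   cert_matches_key cert.pem key.pem && echo "certificate and key match"
```

A mismatch here usually means an old key or certificate is still lying around from an earlier run.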

Changing passwords

To generate the hashed password for Elasticsearch run the following docker container:

docker run --rm -ti amazon/opendistro-for-elasticsearch:1.13.2 bash /usr/share/elasticsearch/plugins/opendistro_security/tools/hash.sh

After you have entered your passphrase, copy the hash value and paste it into "wazuh-docker/production_cluster/elastic_opendistro/internal_users.yml" under the admin entry, changing the username and putting in the hash:

YOUR_USER:
  hash: "YOUR_HASH_HERE"
  reserved: true
  backend_roles:
  - "admin"
  description: "Admin user"

And remember to go back into "docker-compose.yml" and change the admin user credentials (in the Wazuh and Kibana services, for Elasticsearch access).
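
A hash that got truncated while copying is easy to miss. The following is a rough sanity check, assuming the hash tool emits a bcrypt string (a "$2a$", "$2b$" or "$2y$" prefix, a two-digit cost and 60 characters in total); that format is my assumption, and looks_like_bcrypt is a name made up for this sketch.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the argument has the shape of a bcrypt hash:
# "$2a$", "$2b$" or "$2y$", a two-digit cost, "$", then 53 more characters.
looks_like_bcrypt() {
    printf '%s' "$1" | grep -Eq '^\$2[aby]\$[0-9]{2}\$[./A-Za-z0-9]{53}$'
}

# Usage (single quotes keep the shell from expanding the "$" signs):
#   looks_like_bcrypt 'YOUR_HASH_HERE' || echo "hash looks truncated or malformed"
```

This only checks the shape of the string, not whether it matches your passphrase.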

And run the stack:

docker stack deploy -c docker-compose.yml wazuh

And everything should be up and running (read further).
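
To see whether the services actually came up, the replica counts of the stack can be checked. This is a small sketch: all_replicas_up is a helper name invented here, and it only parses the "running/desired" values that docker stack services prints for the stack named wazuh.

```shell
#!/bin/sh
# Hypothetical helper: reads one "running/desired" value per line on stdin
# (e.g. "1/1") and succeeds only if every service has all replicas running.
# Any extra text after the first field on a line is ignored.
all_replicas_up() {
    seen=0
    while read -r replicas _rest; do
        [ -z "$replicas" ] && continue
        seen=1
        running=${replicas%%/*}
        desired=${replicas##*/}
        [ "$running" = "$desired" ] || return 1
    done
    # An empty list (no services found) counts as "not up".
    [ "$seen" -eq 1 ]
}

# Usage:
#   docker stack services --format '{{.Replicas}}' wazuh | all_replicas_up \
#       && echo "all services are running"
```

Elasticsearch can take a little while to start, so a first "not up" result right after deploying is not necessarily a problem.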

Due to licensing issues

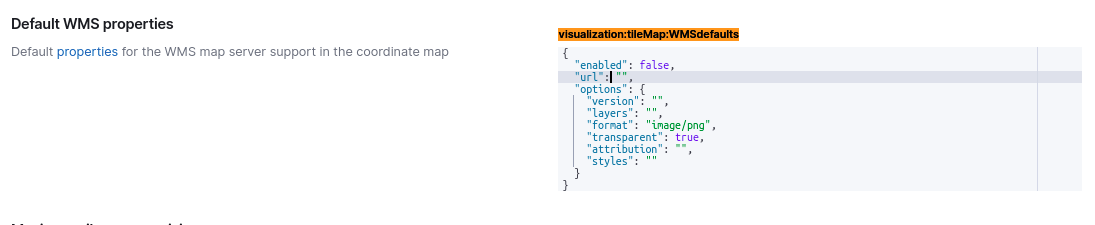

Elastic, the company behind Elasticsearch, changed the license of Elasticsearch and Kibana in early 2021, so they are no longer Open Source. Luckily there is still an Open Source distribution available, Amazon's "Open Distro for Elasticsearch". But some things, e.g. the map tile server, are no longer available in Kibana, so you have to provide your own.

Go to (menu): Stack Management (at the bottom) -> Advanced Settings, and find the section "Default WMS properties":

And add your own service here.

Troubleshooting

Usually the trouble arises in the certificate part.

- First try to clean up old wazuh volumes, if they exist.

- Secondly, look at the error messages (typically on the elasticsearch container)

- Have a look at the "certs.yml" file.
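
The first two points can be sketched as shell commands. This assumes the stack was deployed under the name wazuh, so that Swarm prefixes its volumes and services with "wazuh_"; stack_volumes is a helper name made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: reads volume names on stdin and prints only those
# that belong to the given stack, i.e. that start with "<stack>_".
stack_volumes() {
    prefix="$1_"
    while read -r name; do
        case "$name" in
            "$prefix"*) printf '%s\n' "$name" ;;
        esac
    done
}

# Usage:
#   docker volume ls -q | stack_volumes wazuh      # list old volumes of the stack
#   docker service logs wazuh_elasticsearch        # error messages from Elasticsearch
#
# Removing the listed volumes deletes all stored Wazuh data, so only do this
# on a setup you are willing to rebuild:
#   docker stack rm wazuh
#   docker volume ls -q | stack_volumes wazuh | xargs -r docker volume rm
```

Matching on the exact prefix avoids touching volumes of other stacks whose names merely contain "wazuh".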